Artificial intelligence has quickly moved from a futuristic concept to a critical business tool. Today, nearly two-thirds of organisations use generative AI for tasks such as customer service chatbots and advanced data analytics. While AI offers many benefits, it also introduces new risks and security challenges that cannot be ignored. This is where AI TRiSM (AI Trust, Risk, and Security Management) becomes essential: it helps organisations deploy AI solutions safely and sustainably, balancing innovation with risk mitigation and regulatory compliance.

AI TRiSM (Artificial Intelligence Trust, Risk, and Security Management) is a comprehensive framework, developed by Gartner, for ensuring the governance, trustworthiness, fairness, reliability, robustness, efficacy, and data protection of AI models.

This framework helps organisations identify, monitor, and mitigate the risks of implementing AI, while ensuring compliance with relevant regulations and data privacy laws.

AI TRiSM takes a structured approach to managing AI by focusing on three key areas.

Together, these components help organisations use AI safely and responsibly.

Businesses that put strong AI TRiSM frameworks in place can see real benefits across their operations, security, and compliance efforts. Here's why it matters in today's AI-driven world:

The AI TRiSM framework stands on three essential pillars that work together to create a strong and trustworthy foundation for AI governance. Each pillar focuses on a key area, from building stakeholder confidence to protecting against new and evolving risks.

Trust is the cornerstone of successfully adopting and deploying AI in any organisation. This pillar aims to make AI systems transparent and understandable, giving stakeholders confidence through clear decision guidelines and open communication about how AI systems operate.

Building trust involves:

Managing risk means proactively identifying and addressing potential AI issues before they affect operations. This pillar covers the wide range of risks AI introduces, including biased data, regulatory compliance, and technical vulnerabilities.

Effective risk management requires:

Security acts as a protective shield, safeguarding AI systems from threats both inside and outside the organisation. This pillar is about putting strong defences in place throughout the AI lifecycle.

Key security measures include:

According to Gartner, these are the key features that form the foundation of an effective AI TRiSM platform:

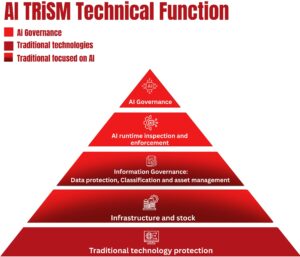

AI TRiSM is built on four key layers, with a fifth foundational layer made up of traditional tech tools like network, endpoint, and cloud security solutions.

This layer focuses on managing how AI is developed and used across the organisation. It involves multiple teams – from legal to engineering – and ensures that AI is:

It also supports audits, traceability, and compliance with frameworks such as the NIST AI RMF, ISO/IEC 42001, and the EU AI Act.
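To make the idea of auditability and traceability more concrete, the sketch below shows one common technique (a hash-chained, append-only log) for recording AI events so that later tampering can be detected. It is a minimal illustration under our own assumptions: the `AuditLog` class, its fields, and the example model and event names are hypothetical, and nothing here is prescribed by Gartner, NIST, ISO/IEC, or the EU AI Act.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Hypothetical append-only audit log: each entry stores the hash of the previous one."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(body: dict) -> str:
        # Hash a canonical JSON encoding so key order does not change the digest.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, model_id: str, event: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        record = {"model_id": model_id, "event": event,
                  "details": details, "prev_hash": prev_hash}
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; editing any earlier entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("credit-model-v3", "prediction", {"input_id": "app-1042", "decision": "refer"})
    log.append("credit-model-v3", "model_update", {"approved_by": "risk-team"})
    print(log.verify())  # True
    log.entries[0]["details"]["decision"] = "approve"  # simulate tampering
    print(log.verify())  # False
```

Chaining hashes means an auditor only needs to trust the latest hash to detect edits anywhere earlier in the log. A production system would also add timestamps and store the log outside the systems it records.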

This layer monitors AI systems in real time, whether they are models, applications, or agents, to:

This layer ensures that AI accesses only the right data, at the right time, with the right permissions. It includes:
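As an illustration of the "right data, right time, right permissions" principle, here is a minimal sketch of a deny-by-default access check that an AI agent's data requests could pass through. Everything in it is a hypothetical assumption: the role names, the data classifications, the access windows, and the `is_allowed` function are made up for illustration and do not come from any specific AI TRiSM product.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class AccessPolicy:
    """Hypothetical policy: which data classifications a role may read, and when."""
    allowed_classifications: frozenset
    start: time
    end: time


@dataclass(frozen=True)
class DataRequest:
    agent_role: str
    dataset: str
    classification: str  # e.g. "public", "internal", "confidential"
    requested_at: datetime


# Illustrative policies only; real ones would come from a governance system.
POLICIES = {
    "support-chatbot": AccessPolicy(frozenset({"public", "internal"}), time(8, 0), time(20, 0)),
    "analytics-agent": AccessPolicy(frozenset({"public", "internal", "confidential"}), time.min, time.max),
}


def is_allowed(req: DataRequest) -> tuple:
    """Return (decision, reason); unknown roles are denied by default."""
    policy = POLICIES.get(req.agent_role)
    if policy is None:
        return False, "unknown role: denied by default"
    # Right permissions: the role must be cleared for this data classification.
    if req.classification not in policy.allowed_classifications:
        return False, f"{req.agent_role} may not read {req.classification} data"
    # Right time: the request must fall inside the role's access window.
    if not (policy.start <= req.requested_at.time() <= policy.end):
        return False, "outside permitted access window"
    return True, "allowed"


if __name__ == "__main__":
    req = DataRequest("support-chatbot", "customer_pii", "confidential", datetime(2025, 1, 6, 10, 30))
    print(is_allowed(req))  # (False, 'support-chatbot may not read confidential data')
```

Returning a reason alongside the decision makes each denial explainable and easy to log, which supports the trust and audit goals described earlier.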

This layer covers the hardware, software, and deployment environments that run AI workloads. It focuses on:

Artificial intelligence is reshaping industries, but it delivers real value only when it is trusted, secure, and responsibly governed. AI TRiSM provides the guardrails businesses need to balance innovation with risk management.

By integrating AI TRiSM into your security and governance strategy, you not only safeguard sensitive data and ensure compliance but also build the foundation for sustainable, future-ready innovation.

The message is clear: AI without trust is a liability. AI with TRiSM is a long-term asset.

The content of this blog has been informed by insights and definitions from leading industry sources, including Gartner, IBM, Splunk, and Proofpoint, along with additional perspectives from other industry analyses and our own understanding.